Author: Jamie Marraccini – VP of VIAVI Solutions’ Inertial Labs division

22 July 2025

Figure 1: A comparison of gyroscope technologies, showing how bias and scale-factor stability determine their role in navigation, from simple motion detection (MEMS) to full inertial navigation (RLG)

Autonomous navigation is a key ingredient for a growing range of applications, from unmanned aerial vehicles (UAVs) and other robots through to systems used for surveying and mapping. The ability of these systems to follow courses and reliably map objects around them will almost always depend on access to the signals sent by the constellations that deliver global navigation satellite system (GNSS) services.

It is hard, however, to make certain that GNSS reception will always be possible during operations. A receiver that uses GNSS alone needs line-of-sight access to the relatively low-power RF signals from orbiting satellites. Because these signals are so weak, they are easily jammed by hostile operators or disrupted inadvertently by interference from badly designed equipment. For these reasons, any system needing reliable navigation must have at least one alternative to GNSS. Each additional source will further improve system reliability in denied, degraded and disrupted space operational environment (D3SOE) situations.

Inertial sensors

Inertial sensing devices, such as accelerometers and gyroscopes built into the system, deliver orientation and direction data that are always available. But designers need to be mindful of the characteristics of these sensors, as they have a large impact on navigational reliability. Historically, such devices were comparatively large and heavy, as with ring laser gyroscope (RLG) implementations. Even some of the novel concepts being trialled for accelerometer design, such as cold-atom interferometry, require a large amount of support equipment, which increases their size and weight to the point where they can only be practically used on ships and large aircraft.

The arrival of micro-electro-mechanical system (MEMS) technology made it possible to incorporate inertial navigation into the smallest UAVs and robots. These sensors have a high value, not just for direction-finding, but for stabilisation too. Their ability to capture fast-changing motions provides the system with better data to help correct the inputs from cameras and other sensors that are used to locate targets.

Whether using MEMS technology or based on other mechanical structures, the core concept behind the two forms of inertial sensor remains the same. The theoretical accelerometer places a proof mass between two flexible or sprung elements that are fixed at either end to the sensor housing. This lets the proof mass move back and forth along the axis of the two springs. Acceleration along that axis displaces the proof mass relative to the housing, and the size of this displacement provides a readout of the net acceleration. MEMS devices typically place the small proof mass on a beam that acts as the springs, using a design that constrains movement to be primarily along the measurement axis.
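The proof-mass description above can be illustrated with a minimal sketch of an idealised spring-mass accelerometer at steady state, where the spring force balances the inertial force (k·x = m·a). The function name and the numerical values are hypothetical and chosen only for illustration; a real MEMS device also has damping, nonlinearity and readout electronics that this ignores.

```python
def acceleration_from_displacement(displacement_m, spring_constant_n_per_m, proof_mass_kg):
    """Steady-state acceleration along the sense axis from Hooke's law: a = k * x / m."""
    if proof_mass_kg <= 0:
        raise ValueError("proof mass must be positive")
    return spring_constant_n_per_m * displacement_m / proof_mass_kg


# Hypothetical illustrative values, not taken from any datasheet.
spring_constant = 2.0      # N/m, combined stiffness of the two springs
proof_mass = 1e-9          # kg
displacement = 4.905e-9    # m, measured deflection of the proof mass

accel = acceleration_from_displacement(displacement, spring_constant, proof_mass)
print(f"Estimated acceleration: {accel:.2f} m/s^2")
```

With these assumed values the deflection corresponds to roughly 1 g, which shows why the readout has to resolve displacements on the nanometre scale.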

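Whereas conventional gyroscopes use masses that are allowed to spin, MEMS versions use the properties of vibrating elements to detect how the system they are in is rotating. When the system rotates, the Coriolis effect typically deflects the vibrating element at right angles to its driven motion, and the change in vibration indicates the rotational velocity of the system. Integrating these rate readings gives an estimate of orientation which, combined with the integrated accelerometer outputs, provides the ability to estimate position.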

Drift

Without recalibration from GNSS or another absolute positioning source, these inertial sensors accumulate errors over time. A key source of error is the bias of the sensor, which represents the tendency of the output to deviate in a consistent direction when the physical input is zero. In consumer-level MEMS devices, this bias can lead to large cumulative errors: a gyroscope might deviate by 100° from the correct angle after an hour.
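To make the effect of bias concrete, the following sketch integrates a constant bias over time. It assumes the bias is perfectly constant and is the only error source, which is a simplification. The 100°/hour figure is the consumer-level example above and 1°/hour is a tactical-grade rotational drift level; the accelerometer bias value and the function names are hypothetical.

```python
def heading_error_deg(gyro_bias_deg_per_hr, elapsed_s):
    """Heading error from integrating a constant gyroscope bias once."""
    return gyro_bias_deg_per_hr * elapsed_s / 3600.0


def position_error_m(accel_bias_m_s2, elapsed_s):
    """Position error from integrating a constant accelerometer bias twice."""
    return 0.5 * accel_bias_m_s2 * elapsed_s ** 2


one_hour_s = 3600.0
consumer_error = heading_error_deg(100.0, one_hour_s)  # consumer-level example
tactical_error = heading_error_deg(1.0, one_hour_s)    # tactical-grade drift level

# Hypothetical accelerometer bias of 0.01 m/s^2 left uncorrected for one minute.
minute_position_error = position_error_m(0.01, 60.0)

print(f"Consumer gyro after 1 h: {consumer_error:.1f} deg")
print(f"Tactical gyro after 1 h: {tactical_error:.1f} deg")
print(f"Position error after 60 s: {minute_position_error:.1f} m")
```

The heading error grows linearly with time, whereas the position error from an accelerometer bias grows with the square of time, which is why even short GNSS outages matter for position.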

Random-walk deviation is another type of error. It arises because the system must constantly add up sensor measurements to calculate velocity, orientation and distance. As with bias, the tiny, random noise in each measurement accumulates, causing the final calculation to drift unpredictably from the true value. Changes in temperature and vibration provide further sources of error.
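The accumulation of random noise can be sketched with a small Monte Carlo simulation. It assumes zero-mean, uncorrelated (white) Gaussian noise on each rate sample and no bias, which is an idealisation. Under that assumption the spread of the integrated angle grows with the square root of the number of samples. All names and numerical values here are hypothetical.

```python
import math
import random
import statistics


def simulated_angle_std(n_steps, noise_std_deg_s, dt_s, n_trials=2000, seed=0):
    """Standard deviation of the integrated angle across many simulated runs."""
    rng = random.Random(seed)
    final_angles = []
    for _ in range(n_trials):
        angle = 0.0
        for _ in range(n_steps):
            # Each rate sample contains only zero-mean white noise (no bias).
            angle += rng.gauss(0.0, noise_std_deg_s) * dt_s
        final_angles.append(angle)
    return statistics.pstdev(final_angles)


def predicted_angle_std(n_steps, noise_std_deg_s, dt_s):
    """Analytical spread for a sum of independent samples: sigma * dt * sqrt(N)."""
    return noise_std_deg_s * dt_s * math.sqrt(n_steps)


noise_std = 0.1   # deg/s per sample, hypothetical
dt = 0.01         # s, i.e. a 100 Hz sample rate

short_std = simulated_angle_std(100, noise_std, dt)
long_std = simulated_angle_std(400, noise_std, dt, seed=1)

print(f"Spread after 1 s: {short_std:.4f} deg (predicted {predicted_angle_std(100, noise_std, dt):.4f})")
print(f"Spread after 4 s: {long_std:.4f} deg (predicted {predicted_angle_std(400, noise_std, dt):.4f})")
```

Quadrupling the integration time roughly doubles the spread, in contrast to bias, where the error grows in direct proportion to time.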

Figure 2: An Inertial Labs MEMS sensor for enabling D3SOE navigation

The degree to which these errors affected MEMS devices in the past resulted in implementation focusing on consumer/industrial-level applications, often designed on the assumption that loss of GNSS would be short-lived. A succession of design modifications to help isolate the structures from vibrational forces and reduce bias, combined with manufacturing process improvements, has led to dramatic improvements in performance over the past decade. With the calibration and manufacturing expertise offered by specialists, MEMS devices can now realistically handle tactical-grade navigation (where, for example, rotational drift needs to be as low as 1°/hour). The question for the integrator is the degree to which the system needs to rely on a certain quality grade of inertial sensing.

Every project has its own concept of operations – what the system needs to accomplish, assumptions about its operating environment, plus the levels of performance that each component requires. It is a complex picture and specific to each system, with changes to the operating environment potentially leading to completely different design strategies. For example, if GNSS is likely to be denied by deliberate jamming rather than the system operating in areas where constant line-of-sight is difficult to guarantee, then a different design strategy may be needed. It could prove advantageous to design an RF receiver array that can cope with highly degraded GNSS signals, rather than relying more heavily on alternative forms of navigation.

Degree of accuracy

The length of time over which a system needs to operate will play an important role. For short missions of a few minutes, it is possible to place greater reliance on the inertial sensors, particularly if they are of tactical-grade quality, as there will not be enough time for the cumulative errors to cause a critical deviation in course. Often, the most appropriate choice will be to employ a hybrid strategy, where a high-accuracy inertial-sensor unit is combined with other sensor inputs.
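The relationship between mission length and sensor grade can be sketched as a simple error budget. This assumes a bias-only drift model that ignores random walk, temperature and vibration effects, so it gives an optimistic upper bound rather than a design figure. The 0.5° allowable heading error and the function name are hypothetical.

```python
def max_unaided_seconds(allowed_heading_error_deg, gyro_bias_deg_per_hr):
    """Time until a constant gyro bias alone uses up the allowed heading error."""
    if gyro_bias_deg_per_hr <= 0:
        raise ValueError("bias must be positive for this estimate")
    return allowed_heading_error_deg / gyro_bias_deg_per_hr * 3600.0


allowed_error_deg = 0.5  # hypothetical mission requirement

tactical_window_s = max_unaided_seconds(allowed_error_deg, 1.0)    # 1 deg/hour
consumer_window_s = max_unaided_seconds(allowed_error_deg, 100.0)  # 100 deg/hour

print(f"Tactical-grade window: about {tactical_window_s / 60:.0f} minutes")
print(f"Consumer-grade window: about {consumer_window_s:.0f} seconds")
```

Under these assumptions a tactical-grade gyroscope could run unaided for tens of minutes, while a consumer-grade device would need correction from another source within seconds.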

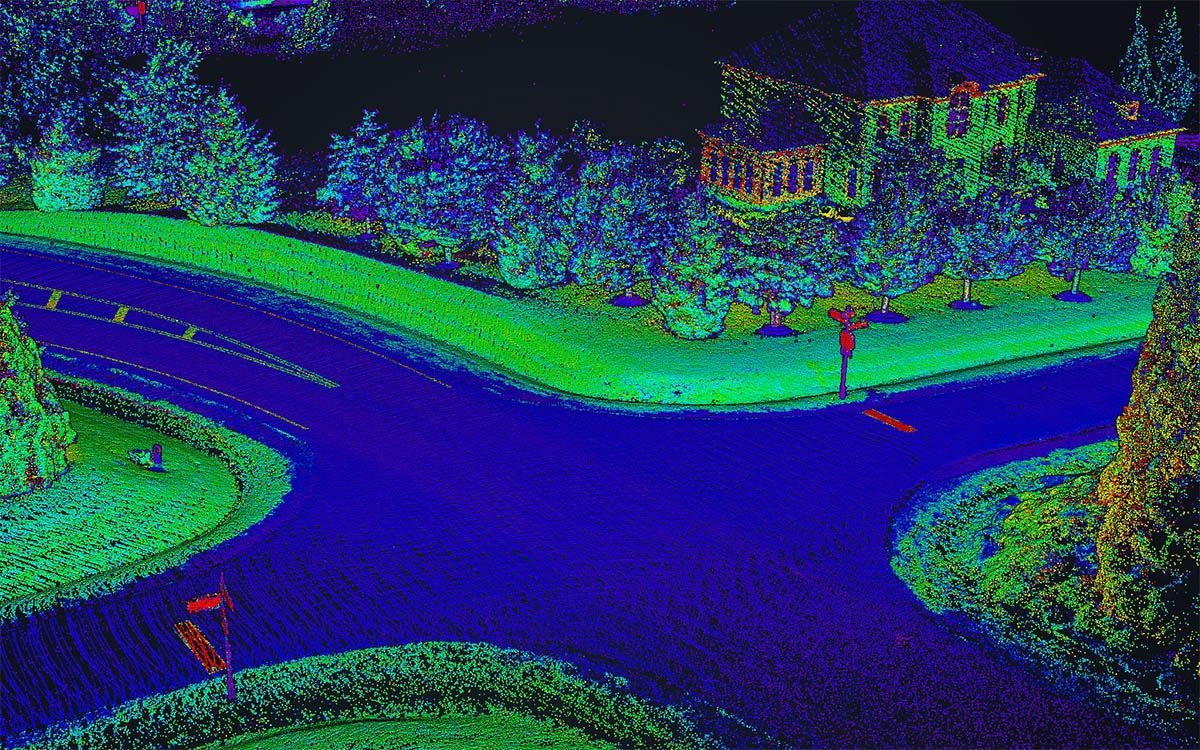

If the system can turn to other external sources of location data, it can correct its internal positioning model effectively enough to rely on a lower level of inertial data quality. But if the system needs to navigate under dense foliage or in deep canyons, other forms of sensor (such as cameras) may be needed to correct the model.

One way to improve positioning accuracy is to add support for alternative satellite constellations operating in lower orbits than GNSS. These are growing in number and capability. In recent years, Iridium added a time-and-location capability to its satellite transmissions, which are 1,000 times more powerful than those provided by GNSS. Researchers have proposed extensions to some of the other low-earth orbit (LEO) constellations that could deliver similar precision at low cost. There remains some susceptibility to jamming, though the higher RF power of the signals makes this more difficult for an adversary to achieve.

It is, however, harder still to interfere with built-in cameras or LiDAR sensors. If the system is primarily navigating over land, a downward-facing camera provides the ability to perform reliable odometry by letting software track the relative movement of fixed objects. LiDAR provides a similar capability in which software can detect the changes in relative position of shapes in the reflected point cloud as the system passes fixed structures.

The need for sensor fusion

As already highlighted, maintaining robust navigation in D3SOE environments cannot rely on a single technology. Sensor fusion software allows the integration of data from these and other sensors to build a more complete picture of the movement of the system. Admittedly, sensor fusion, and its importance in minimising the drift of individual MEMS sensors, deserves an article in its own right. However, it is worth briefly highlighting here the key system design considerations needed for effective implementation.
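As a rough illustration of how fusion limits drift, the sketch below uses a complementary filter, one of the simplest fusion techniques and not necessarily what any particular product implements. It integrates a biased gyroscope rate and nudges the result towards an absolute heading reference whenever one is available, for example from a camera or satellite fix. The gain, the update interval and all names are hypothetical, and angle wrap-around is ignored for brevity.

```python
def fuse_heading(gyro_rates_deg_s, reference_headings_deg, dt_s, gain=0.2, initial_heading_deg=0.0):
    """Complementary filter: integrate the gyro, then correct towards a reference if present."""
    heading = initial_heading_deg
    history = []
    for rate, reference in zip(gyro_rates_deg_s, reference_headings_deg):
        heading += rate * dt_s                      # predict from the gyroscope
        if reference is not None:
            heading += gain * (reference - heading)  # correct with the absolute source
        history.append(heading)
    return history


n_samples = 3600                 # one hour at 1 Hz
bias_deg_s = 100.0 / 3600.0      # the 100 deg/hour consumer-level example
rates = [bias_deg_s] * n_samples  # vehicle is not turning, so the gyro reports only bias

# Unaided: no absolute reference is ever available.
unaided = fuse_heading(rates, [None] * n_samples, dt_s=1.0)

# Aided: a reference heading of 0 deg (the true heading) arrives every tenth second.
references = [0.0 if i % 10 == 9 else None for i in range(n_samples)]
aided = fuse_heading(rates, references, dt_s=1.0)

print(f"Unaided heading error after 1 h: {unaided[-1]:.1f} deg")
print(f"Aided heading error after 1 h: {aided[-1]:.2f} deg")
```

In this idealised case the occasional correction keeps the error bounded at around a degree instead of letting it grow without limit. A production system would more likely use a Kalman filter or similar estimator that also tracks the bias itself.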

Given the many data sources that can be used for navigation, interoperability is a vital consideration when selecting a solution. Developers want to extract output data in forms that they can most easily process and use for sensor fusion. A solution that conforms to a common set of output protocols also eases problems caused by supply chain failures: devices that can easily be substituted give manufacturers the means to navigate their way around component shortages and delays.

In a market environment where reliable navigation is increasingly essential, inertial sensors play an important role. But an understanding of the requirements of each system and the contribution made by other sensors, from cameras to satellite receivers, will ensure the best results.